“Homo Sacer” is Latin for “the accursed man”, and a figure in Ancient Roman law, one who has been banned, or exiled, and may be killed by anyone, without penalty. The accursed man acquires his status from a number of unpardonable crimes, such as oath-breaking, which, since the oath invokes the Gods, invites any and all further punishments upon his head. The accursed man is literally an “outlaw”, in that he is outside the law, stripped of all rights and protections. Homo Sacer persists through medieval law in this form: only with the establishment of Habeas Corpus in 1679 does the “outlaw” cease to exist and all are guaranteed rights – in theory at least.

The Italian philosopher Giorgio Agamben takes up the figure in his work Homo Sacer: Sovereign Power and Bare Life. For Agamben, the exclusion of the accursed man is a defining feature of political life: in this exception is conceptualised the entirety of the law, giving it meaning and allowing it to be defined.

Have you met one of these yet? A hologram? It seems like many people have, and I keep running into them – in shops, at railway stations, at airports, hospitals, government buildings… The holograms are on a roll, infiltrating service and transit spaces with fixed grins and that appealing glow.

I’m fascinated by these holograms, by what they reveal about our reactions to technology, and by the expectations others have about that reaction. Holograms are just the latest shiny thing, designed to capture our attention for a few brief seconds in order to deliver a banal message (“please carry objects on the escalators”, “no sharp objects in hand luggage”) – messages which we’ve heard a thousand times before, and have learned to ignore. The hologram seems perfectly suited to some tasks (demonstrating to Kuwaiti shoppers how to brew tea) and spectacularly unsuited to others (advice to hospital patients). Joanne McNeil has written about the affective labour of these “workaholic holograms”, comparing them to Pret a Manger employees who have their emotional state written into their contracts, but, as the brochure notes, the hologram “works 24 hours, 7 days a week and never takes a break! No background check required!”

The hologram is the ultimate 21st Century worker: fully virtualised, pre-programmed, untiring, spectacular. People stop and photograph them, marvel at their uncanny glow, and even when this reaction is one of bewilderment and disorientation, it is enough: the message gets through. But the hologram is a blank: you can’t talk back to it, it is the final conversion of discourse into direct power. A recorded announcement which tells you that this is how it is, with a digital smile.

Digital technology obscures, in so many ways. It obscures at the interface level, by making complex tasks opaque behind seamless, glass-smooth interactions. And it obscures at the software level too: it is made of code, unreadable to most, inaccessible at source. Tap one button and our devices spin into life, communicating with distant servers, juggling tasks, presenting results, accomplishing things, in a second, in an instant, and we do not know how they do it. And this is fine when you just want to get on with your day, more concerning when that software has access to your personal contacts, or attempts to manipulate your state of mind.

The paradox of that invisibility, which I have been exploring for some time, is that while the digital defaults to illegibility, it also renders that operation more legible to those who can read it, who do have access, because its logical nature, the nature of its operation, means it must be written down. Unlike previous forms of power, intention must be explicitly encoded into the machine. This intention can be hidden, but it’s always present. Neither good nor bad, nor neutral; invisible, but never wholly illegible.

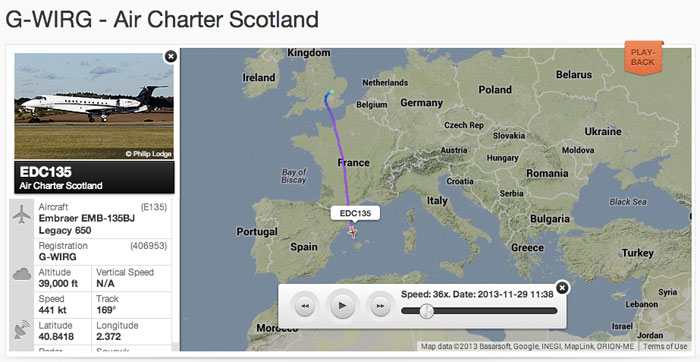

It’s this paradox that makes a project like Dronestagram possible: the use of one technological substrate tuned to openness (social media, digital mapping), to explore another designed for secrecy and obscuration (military classification and the drone war). Similarly, for all the deliberate obstructions placed in the path of activists supporting detained migrants, there are web sites explicitly designed to expose the same information, available to the public.

That work on immigrant detention, and the work of the Bureau of Investigative Journalism on citizenship, has led me to think a lot about citizenship as a concept over the last few months. As ever, this is a way of understanding complex systems and conceptual frameworks which impact directly on culture and people.

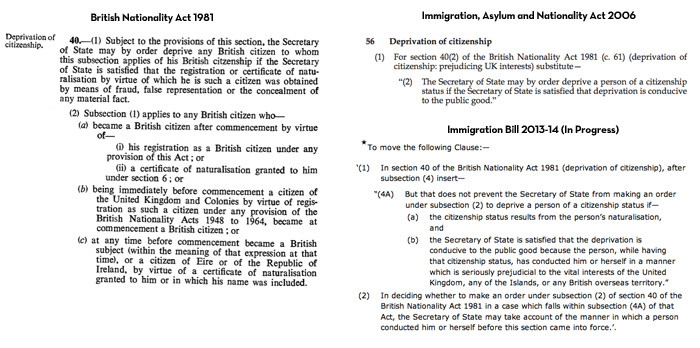

When I was researching my essay on ANPR and algorithmic surveillance, I was surprised by some of the explicit statements in the applicable laws which should have raised red flags with anyone reading them – if, indeed, anyone does read law outside the courts and parliament. For example, the Regulation of Investigatory Powers Act 2000, which covers surveillance by the Police in the UK, explicitly exempts vehicle surveillance from other restrictions on surveillance, clearing a space for exactly the sort of dragnet domestic surveillance of travel we’ve seen massively expand in the last ten years. A space had been deliberately hollowed out within a law which was supposed to regulate surveillance, in order to permit the opposite. It occurred to me that it was possible to do a kind of brass-rubbing on these laws to find those hollow spaces; to reverse engineer these frameworks to reveal their intent.

This approach emerges in part from technology itself, from computer science concepts of version control and changelogs, the ability to read back into code to understand how it was put together and came to be. Looking at the history of British citizenship law, much the same is possible:

Over the years, a document designed as an inclusive definition of citizenship has been overwritten to turn it into one whose main intent is to remove and exclude. Wording is adjusted to bring it into line with other directives, to make it interoperable. The phrase “seriously prejudicial to the vital interests”, for example, which appears in Theresa May’s most recent Immigration Act, originates in the 1961 United Nations Convention on the Reduction of Statelessness – and is employed here, very specifically, to make somebody stateless. (Of course, this is how the law itself, a process of argument, works. A good example is the multiple rewritings of the Ports Circulation Sheet used to detain David Miranda at Heathrow. Wording matters – but, like code, it can be some pretty obscure wording.)
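For anyone who wants to try this kind of reading-back themselves, here is a minimal sketch of the idea, using Python's standard `difflib` module to produce a changelog between two versions of a text. The two versions are hypothetical placeholder wordings invented for illustration, not quotations from any real statute (only the phrase “seriously prejudicial to the vital interests” comes from the text above), and the function name `changelog` is my own.

```python
import difflib

# Hypothetical placeholder wordings, invented for illustration only.
# They are NOT quotations from any real law.
old_version = [
    "A person born in the territory shall be a citizen.",
    "Citizenship may not be withdrawn.",
]
new_version = [
    "A person born in the territory shall be a citizen.",
    "Citizenship may be withdrawn where conduct is seriously prejudicial to the vital interests of the state.",
]


def changelog(old, new, old_label="earlier", new_label="later"):
    """Return unified-diff lines: '-' marks removed wording, '+' marks added wording."""
    return list(
        difflib.unified_diff(
            old, new, fromfile=old_label, tofile=new_label, lineterm=""
        )
    )


diff_lines = changelog(old_version, new_version)
for line in diff_lines:
    print(line)
```

Lines prefixed with `-` are wording that was taken out, lines prefixed with `+` are wording that was put in: the same view a programmer uses to see how a piece of code came to be.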

I was recently commissioned to produce a work for FACT in Liverpool, for an exhibition entitled “Science Fiction: New Death”, and for it I produced a hologram of my own. Entitled Homo Sacer, the hologram intones lines from various international agreements, treaties, national laws and public government statements. Beginning with the Universal Declaration of Human Rights’ 1948 directive “Everyone has the right to a nationality”, the monologue steps through the various laws which repeal this right, culminating in the text of the letter which stripped Mohamed Sakr – later killed by a drone strike – of his British citizenship, and the Home Office’s oft-repeated mantra “Citizenship is a privilege not a right”.

Installed in the lobby of FACT during the exhibition, the hologram walks visitors through the process by which a British-born citizen can be translated through various forms of identity, and ultimately reduced to the state of homo sacer, the accursed man, who may be killed at any time.

(I’ve written more about this legal conception of rights, citizenship, and the case of Mohamed Sakr, for the Walker Art Center. You can read that essay on their web site. I’m also very grateful to Lucy Ellinson, whose role as a drone pilot in George Brant’s play Grounded has been widely acclaimed, for taking the role of the hologram, and to Heather Ross and Vanessa Hodgkinson for technical and artistic assistance.)

If we’re to understand the complex role which technology plays in shaping the world around us, we need a better understanding of complex systems in general, of other kinds of invisible but occasionally legible frameworks, like the law. And in turn, we can take what we have learned in the study of computation and networks and turn that augmented understanding back on the world around us, as a mode of analysis, and perhaps as a lever with which to shift it.
