TL;DR: I made an installation about random numbers for an exhibition curated by Arts at CERN. The first stop for the exhibition is Broken Symmetries at FACT, Liverpool, and the project has its own website.

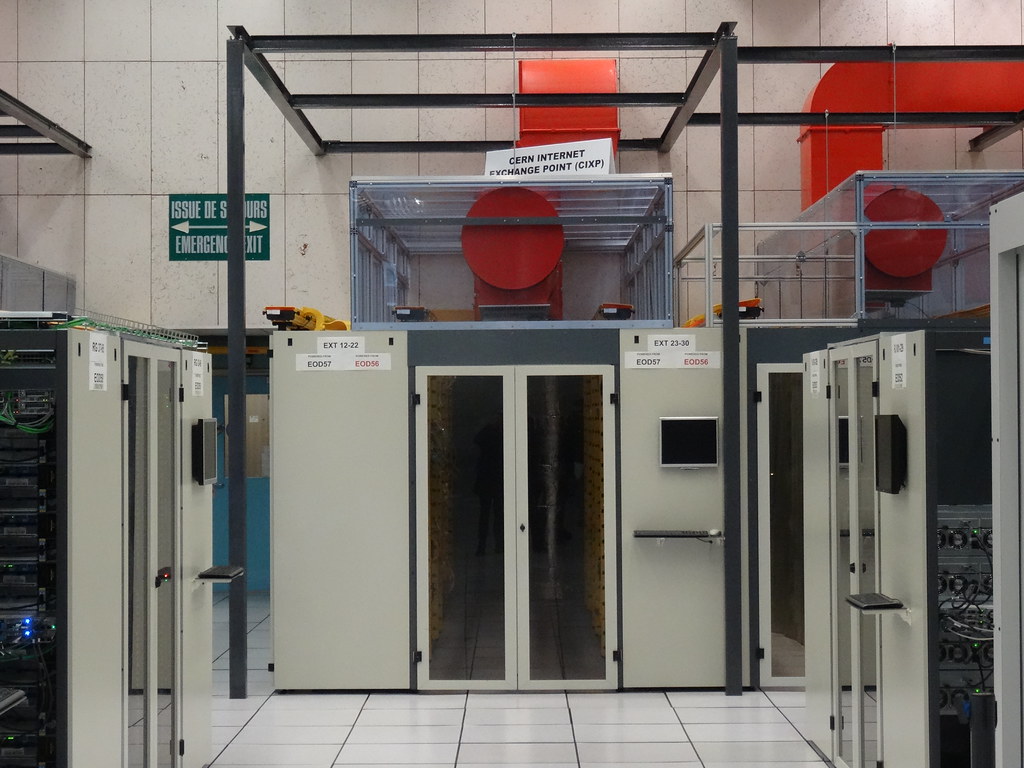

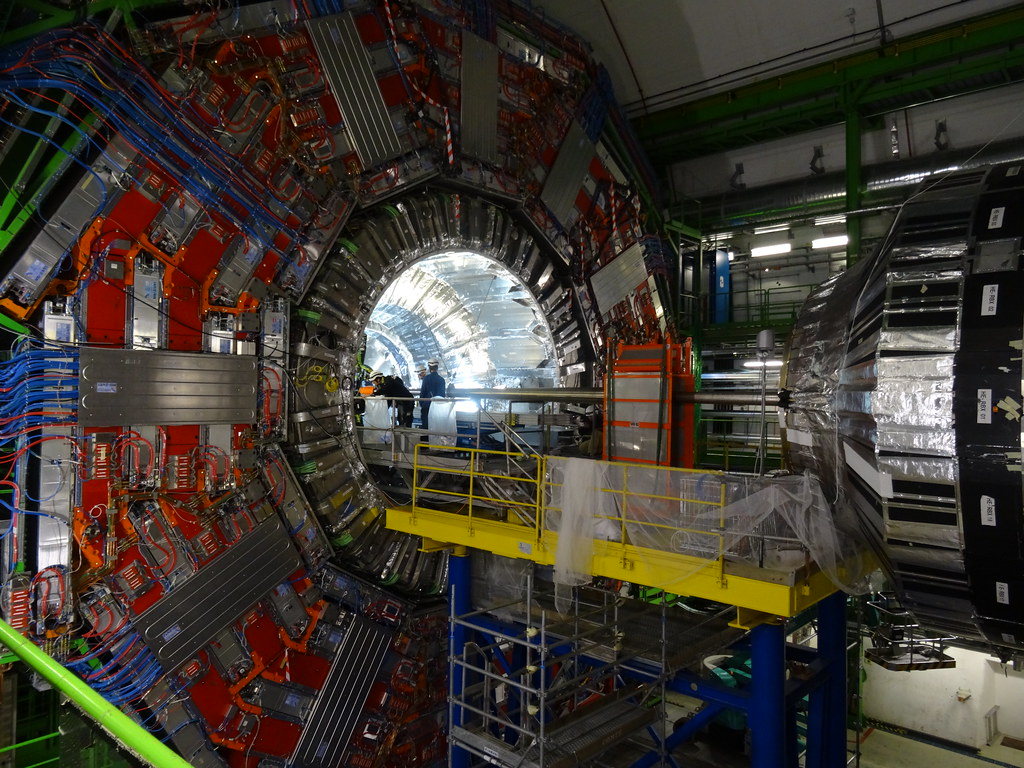

A couple of years ago I applied for the artist-in-residence programme at CERN, the research centre for supercolliders, bosons, dark matter and much else in Geneva, Switzerland (and France). I was lucky enough to receive an honorary mention, meaning I got to spend a couple of days there meeting physicists and exploring the facilities. I'd visited once before, back in 2012, which was very exciting, but it was even more extraordinary to go back a second time, to go down into the tunnels, and to have actual discussions with people there. There are lots of photos from that second trip on Flickr: they're mostly of very big machines, from the LHC's instruments, ATLAS and CMS, and of CERN's datacenter, which is one of the most important scientific and network hubs in Europe, as well as being the home of the very first website.

Arts at CERN invites a number of people to visit every year, and different artists meet different people, but I was fortunate to spend a lot of time talking both with Mark Sutton, a physicist who works on the ATLAS trigger, and with several people from the Theory group. CERN is first and foremost an experimental research centre, and so it's not uncommon to encounter people who work primarily on what are essentially computer science or mechanical engineering problems, but who also have multiple top-level degrees in physics. The ATLAS trigger, for example, is an extremely complex system for determining which of the billion-odd proton collisions occurring in the detector every second are worth saving for later analysis – and as a computer scientist by training, understanding a little of how it works gives me a way in to understanding a little of what it's investigating – but very few people would claim to have a full overview. And that's OK.

CERN is as much philosophical enquiry as heavy engineering: building some of the largest and most powerful machines in existence in order to investigate some of the smallest yet most fundamental interactions in the universe. As Karen Barad reminds us, quantum effects, often perceived as "small", resonate everywhere. What happens at the quantum level defines everything. CERN is both a vast research park and the (heavily contested) instantiation of a particular set of ideas about how the world is and should be, from a model of the universe to a model of collaboration to a model of thought, investigation, and invention.

I was particularly interested in what was happening within the Theory group at CERN – the collection of practitioners within the institution focussed not on constructing apparatuses to test existing suppositions, but on formulating new ones. My – limited and partial – understanding of what is occurring at present at CERN, and within particle physics research in general, is that the last decade or so of work has been incredibly productive, in that it has found and defined the Higgs boson and other particles predicted by the Standard Model – but it has not encountered many of the hypothesised particles which might allow us to consider the Standard Model definitive, or to map out reliable pathways beyond it. We have – as is often the case in scientific enquiry – learned more and opened up more questions at the same time. In particular, the events we might have expected to see if supersymmetry (SUSY) were a sure bet – a position many physicists have spent their entire careers working towards – have not materialised. And so we find ourselves in possession of vast amounts of fascinating and useful information, but no closer to that magical general theory of everything.

Which, to be clear, is great. There's nothing that whets the appetite of humanities and science scholars alike more than unresolved questions, and this is, for those fascinated, the biggest one there is. All too often, such uncertainty is used as a stick to beat science and art: "but what's really going on here?" "what does this really mean?" ("do you really need more money for this?") – but it often feels – as became clear in a series of fascinating discussions at FACT a couple of weeks ago between artists and particle physicists – as if it's only deeply involved artists and scientists who are capable of living productively with the deep uncertainties that are, ahem, the hallmarks of our age.

And so, to randomness. Or rather, to that which cannot be computed. Computational thought, as I attempt to outline in New Dark Age, is the kind of thinking which has settled, often unconsciously, into the belief that only that which is computable – which can be observed, recorded, modelled, and predicted – is true and immanent. And this is a useful way of thinking, but it is woefully insufficient for coming up with new ideas, for breaking with established paradigms – whether we're talking about aesthetic representations, scientific modes, or social forms. Building only upon what our flawed and incomplete models tell us about what we already know about the world is what leads us, on the one hand, to theoretical dead-ends and experimental failures, and on the other to biased systems that merely replicate the failures, power structures, and bigotries of the past in the present day, and cement them for the future.

Allow me a digression. In the ancient agora of Athens, in the earliest days of democracy, there was set up a device called the kleroterion. This was a machine for producing randomness in the service of the operation of the state. Under the original democracy, most of the important posts – juries, magistrates, legislators, and citizens' councils – were chosen not by election, but by sortition – selection by lot.

The kleroterion was the device designed to facilitate sortition: the random distribution of power throughout the populace (limited, it should be noted, by the gender and class politics of the time). Citizens placed their pinakia – a kind of ID token – into slots in the machine, and the order in which a series of dice fell through a channel in the stone determined who would be selected for each role. (For more on this, read Julian Dibbell's excellent Info Tech of Ancient Democracy.)

Sortition represents an interesting road not taken, or abandoned, in present debates about democracy. It survives into the present only in the form of jury service and a few political experiments, yet recent research suggests that selecting people at random from the widest possible group produces better general problem-solving teams than choosing from a narrow group of supposed experts – a finding often summed up in the phrase "diversity trumps ability". That notion deserves a separate essay, so we'll just leave it there for now.

In addition to its function for facilitating sortition, I feel that the kleroterion, by design, performed another crucial function. It made the machinery of state visible and legible to the population: instead of technology rendering power opaque and inscrutable, it reified it as a device which all could inspect and judge, which stood in the central marketplace of the city, and could be understood and held accountable. It is reported that Pericles said of the process that: “It is administered by the many instead of the few; that is why it is called a democracy.” Opacity and inscrutability are not natural forms of technology, or politics: they are imposed by power, and can be undone.

Anyway. In the modern world, we use randomness more and more, and need more of it. Randomness underpins everything from scientific research to cryptography, providing the basis for both the exabyte-scale data analysis performed at CERN and the algorithms which ensure your credit card details aren't stolen online. Randomness has been used throughout history for divination and games of chance, as well as, as we've seen, for ensuring equality. But getting randomness is hard – it's a serious computational problem, one which undermines computational thinking at its core, because that which can be computed is, by definition, not random. (Let's not get into definitions of randomness here, but again if you want more, a bit of Donald Knuth is a good place to start.)

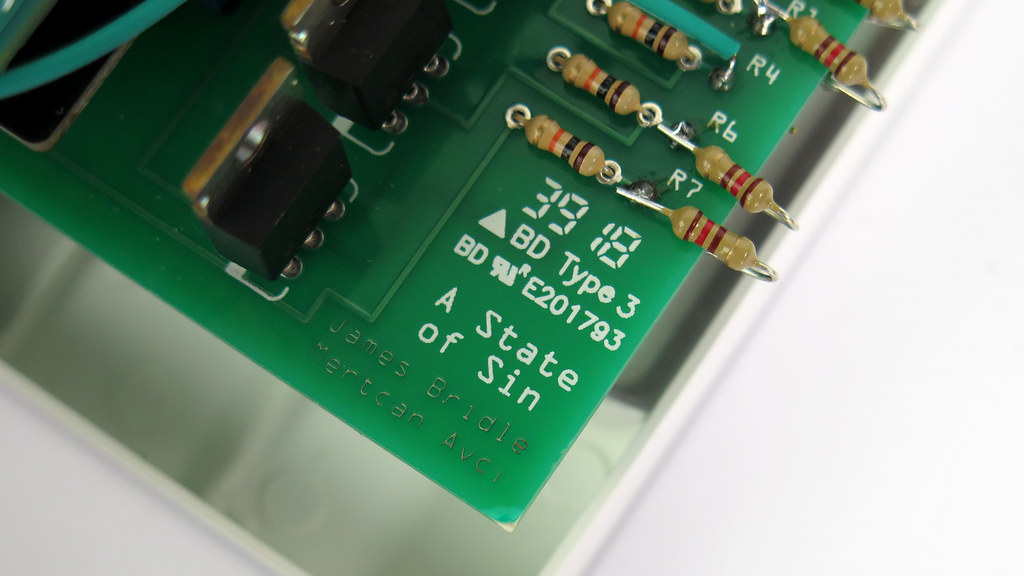

Back in 1949, the polymath John von Neumann, who was one of the key figures in the Manhattan Project and among the first to apply the Monte Carlo method of random sampling on computers, famously stated that "Anyone who attempts to generate random numbers by deterministic means is, of course, living in a state of sin." What he meant was that randomness cannot be determined: it must be produced, found, sought out, discovered. Modern sources of random numbers include atmospheric noise, roulette wheels, and bouncing balls. In order to solve and safeguard some of the trickiest computational operations we perform, it's necessary to go out into the world and sample true complexity from its chaos.

(Briefly, in reference to von Neumann, the endless, “peaceful” generation of random numbers staged in this work is also an attempt to overwrite the militaristic history of computation, from the Manhattan Project to the RAND Corporation’s Million Random Digits.)
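To make von Neumann's point concrete, here is a minimal sketch of a classic "deterministic means" of producing numbers that look random: a linear congruential generator. The constants below are the widely circulated "Numerical Recipes" parameters, chosen purely for illustration; nothing here comes from the robots themselves. Because each value is computed from the one before, the same seed always reproduces exactly the same sequence.

```python
def lcg(seed, n, a=1664525, c=1013904223, m=2**32):
    """Return n pseudo-random integers from a linear congruential generator.

    Each value is computed deterministically from the previous one:
    x_next = (a * x + c) mod m. The default constants are the common
    "Numerical Recipes" parameters, used here for illustration only.
    """
    values = []
    x = seed % m
    for _ in range(n):
        x = (a * x + c) % m
        values.append(x)
    return values


# Same seed in, same sequence out: nothing here was ever random.
first_run = lcg(seed=2019, n=5)
second_run = lcg(seed=2019, n=5)
print(first_run == second_run)  # True
```

The output passes for noise at a glance, but anyone who knows the seed and the formula can predict every number, which is exactly why such generators need a seed sampled from the world.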

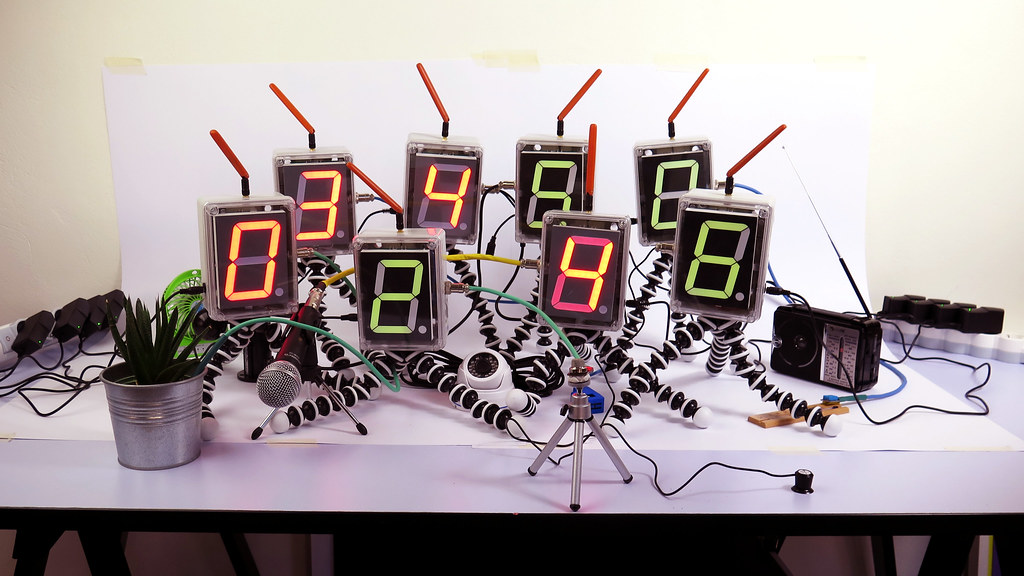

For the CERN commission, and the Broken Symmetries exhibition, I ended up building a series of random number generators – eight of them, in fact – each of which, embodied as a slightly shit robot, uses a different sensor to sample this randomness from the world. These robots were constructed over the summer in Istanbul – with the assistance of Mertcan Avci and the ATÖLYE prototyping lab – from essentially found materials in the city’s wonderful electrical, plumbing, tourist and everything-else-you-can-imagine bazaars.

Sound levels, lighting changes, gas concentrations, breezes, the buzzing of the power grid, heat, humidity and electromagnetic fluctuations – each of these provides the source, or seed, for generating an infinite sequence of new random numbers, a precious yet inexhaustible resource. These numbers are broadcast to a dedicated website, where they are analysed and made available, and where you can also read more about the process involved.
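The robots' actual code isn't described here, so what follows is only a minimal sketch, assuming a common pattern: hash a batch of sensor readings into a fixed-size seed, then expand that seed into an endless stream using SHA-256 in counter mode. The function names `seed_from_readings` and `random_stream` and the sample readings are hypothetical, and this is an illustration rather than a vetted cryptographic design.

```python
import hashlib
import struct
from itertools import islice


def seed_from_readings(readings):
    """Condense raw sensor samples into a 32-byte seed by hashing them.

    `readings` is a hypothetical list of floats (e.g. sound level,
    humidity, field strength). Hashing mixes whatever unpredictability
    the samples contain into a fixed-size value.
    """
    h = hashlib.sha256()
    for value in readings:
        h.update(struct.pack("<d", value))  # 8-byte little-endian double
    return h.digest()


def random_stream(seed):
    """Yield an endless sequence of 32-bit integers from a seed.

    Computes SHA-256(seed || counter) for counter = 0, 1, 2, ...
    Once seeded this part is deterministic, so fresh sensor readings
    should be used to reseed it regularly.
    """
    counter = 0
    while True:
        block = hashlib.sha256(seed + counter.to_bytes(8, "big")).digest()
        # Split each 32-byte digest into eight 4-byte unsigned integers.
        for i in range(0, len(block), 4):
            yield int.from_bytes(block[i:i + 4], "big")
        counter += 1


# Hypothetical readings from one robot's sensors.
readings = [61.3, 0.482, 1013.25, 49.97]
seed = seed_from_readings(readings)
print(list(islice(random_stream(seed), 5)))
```

The split matters: the world supplies the unpredictable seed, while the computation only stretches it. In von Neumann's terms, the "sin" is confined to the expansion step, which is why regular reseeding from the sensors is the important part.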

Several of them are based on particular examples from history. Notably, I was inspired by Lavarand, a process for generating random numbers from the patterns in a lava lamp first implemented at Silicon Graphics and more recently deployed by Cloudflare to secure the modern internet. I'm also a big fan of ERNIE, which picked the winning numbers for Premium Bonds, a UK lottery programme. ERNIE used the electromagnetic noise in fluorescent tubes to produce random numbers, just as one of my robots does, and was designed by Tommy Flowers and Harry Fensom at the Post Office Research Station, the same team that worked on the wartime Colossus code-breaking machines.

(Additional Art/Nerd aside: each robot contains an embedded SIM card – I used Hologram SIMs – designed for Internet of Things applications, making them totally autonomous and independent of gallery Wi-Fi etc. Like many of my works, they exist both inside the gallery and connected to a larger network, and I’d like to build them into a larger system of interacting, distributed artworks… one day.)

I describe this gang of machines as "hopeful robots": simple autonomous machines venturing out into the world in order to learn something from it, and to use what they discover as fuel for new inventions. They stage the encounter between computation and the world as something chancy and contingent, but full of possibilities, as well as joy and humour.

Computation alone is insufficient: to learn, to discover, and to create – to think meaningfully in an entangled, interconnected, and networked world – we must engage, and trust in the noise and messiness of the world itself to assist us in those processes. This is as true of our politics and our social relations as it is of science and art.

The robots will be on tour to a number of venues around Europe over the next couple of years. I hope you get to meet them.
