This is the very lightly edited text of a lecture delivered as part of Through Post-Atomic Eyes in Toronto, 23-25 October 2015. Huge thanks to Claudette Lauzon and John O’Brian for the invitation to speak, and for organising such a fascinating few days. All the talks are online to watch for free. You can also download the image above under a CC license.

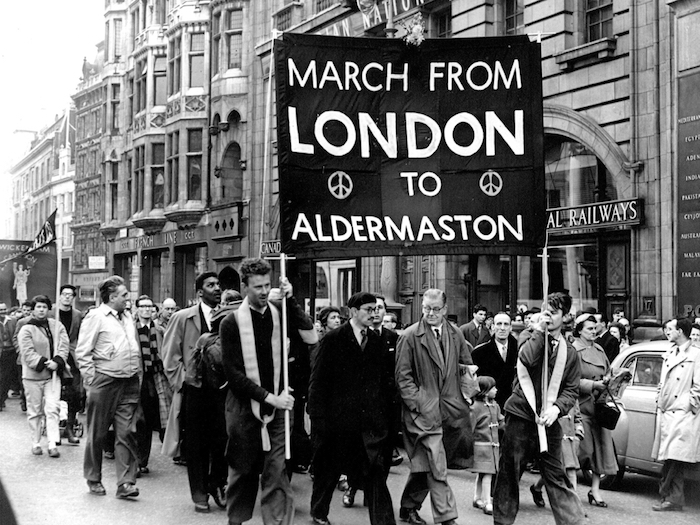

The Campaign for Nuclear Disarmament was formed in the UK in 1957, and in 1959 it began a series of Easter Marches from Aldermaston in Berkshire to the centre of London, calling on the British government to unilaterally disarm. These marches took place over several days, and attracted tens of thousands of people, from all walks of life – but particularly from the Left, the trade unions, and from religious groups.

In 1960, a number of senior CND activists decided that more direct methods than peaceful marches were necessary to capture the imagination of the press and public policy, and decided to embark on a campaign of nonviolent direct action. The resultant organisation was called the Committee of 100 – named for the hundred signatories on its founding document. One of their first actions was a sit-down protest by several thousand people at the Ministry of Defence in 1961 – led by Bertrand Russell, at centre here with his wife Edith, who had formerly been president of CND. The Committee of 100 maintained their nonviolence but over the years hundreds of members were arrested, and many imprisoned.

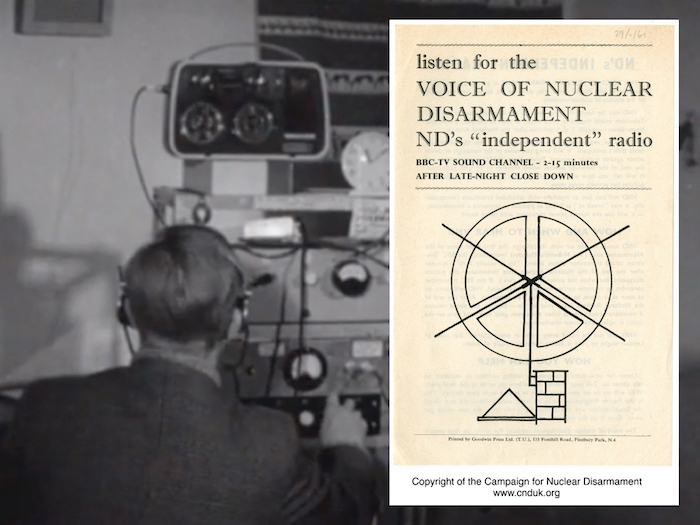

They also had some quite innovative projects, such as the Voice of Nuclear Disarmament. This was a political pirate radio station, broadcasting anti-nuclear speeches and songs (the songs were great by the way – there’s a great album of Scottish anti-nuclear songs on Spotify if you search for “Ding Dong Dollar”, my favourite of which compares the US Polaris missiles in the Holy Loch to the Monster in Loch Ness). The clever thing about the Voice of Nuclear Disarmament is that it broadcast in the TV audio frequency at a time when the BBC closed down for the night, so you’d get a picture of the queen and the national anthem, then the screen would go dark and this propaganda would start coming out of it. I don’t know of many modern hacks more elegant than that.

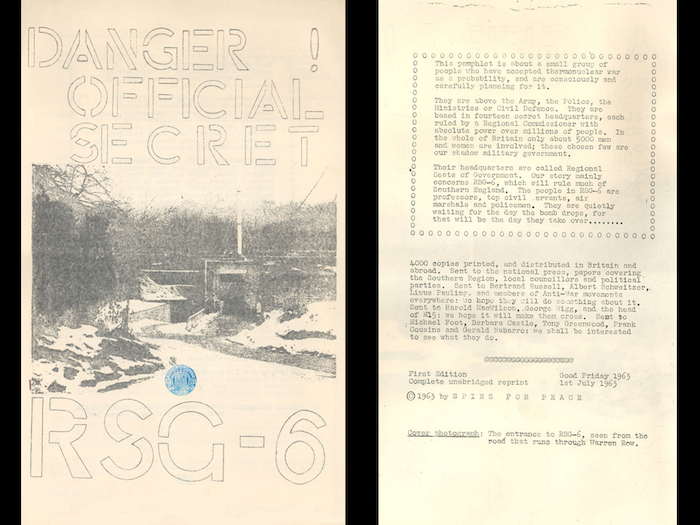

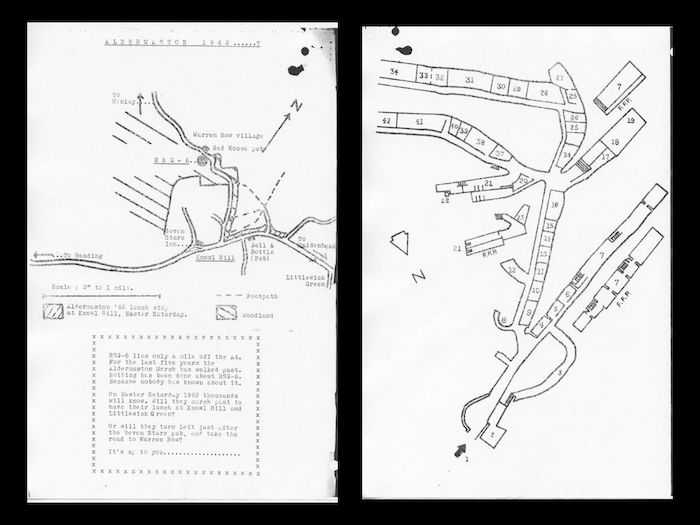

But there was another splinter group within the Committee of 100, who didn’t think nonviolent sit-down protests were enough, and in 1963 five of them left London and travelled to Warren Row near Reading, the location of something called RSG-6. This was one of a nationwide network of bunkers built in secret by the government to govern the country following an explicit breakdown of society after an exchange of nuclear weapons. The five activists broke into RSG-6, photographed the buildings and copied down documents. They printed four thousand copies of this pamphlet containing everything they’d found and posted it to newspapers, politicians, universities and activists, under the name “Spies for Peace”. And then they threw the typewriter they’d used into a canal and disappeared. The identities of several of them are not known to this day.

The pamphlet was released just before the Easter weekend of 1963, and it included complete maps of the locations of the RSGs. RSG-6 was just a few miles from the route of CND’s Aldermaston march, and on the day hundreds of protestors broke away from the march and picketed the site. But the real damage was to the reputation of the government, and its public statements about nuclear war. Up to this point it had been stated publicly that a nuclear war was defendable and winnable, while secretly preparing for its devastating aftermath. This duplicity was unmasked by the Spies for Peace, and had an incalculable effect on changing the narrative around nuclear weapons – from a weapon of the state which was controlled in the service of the citizenry, to a weapon which was essentially uncontrollable, and which could be used by anyone, to destroy everyone.

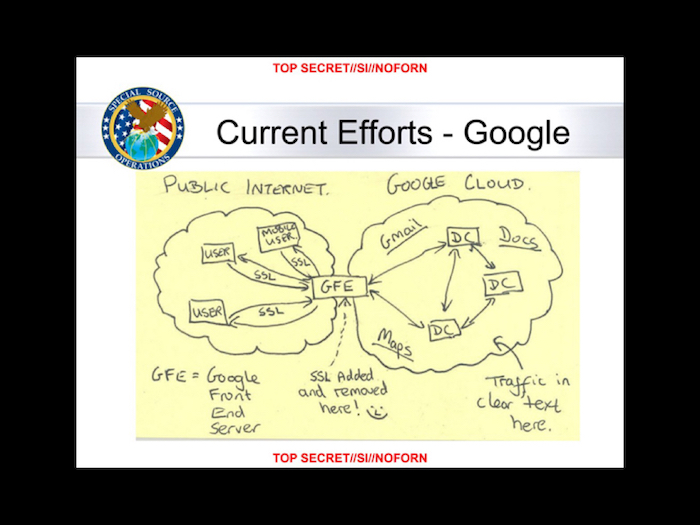

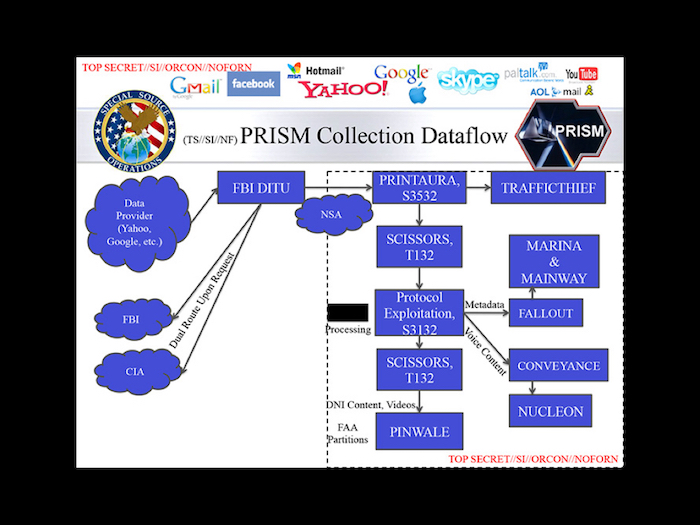

Thinking about Spies for Peace, I’ve been thinking a lot about whistleblowing in our current age. Like many, I have been fascinated and appalled by the revelations which have followed from the release of NSA documents by Edward Snowden. It’s important to note that a lot of this information was not new. If you’ve been following the computer security community for the past decade, or paid attention to previous leaks from people like William Binney or Mark Klein, the fact that something like this was occurring was almost inevitable, yet it took this particular release to bring its size and extent to the wider public consciousness. There was a quantity and visuality to the release itself which was sufficient to bring the attention of the world to bear on it.

However, I’d also argue that this effort has largely failed, or at least failed to produce the change in policy and in public attitudes which some of us might have expected it to. If you look at the situation we are in now, a couple of years after the Snowden revelations, most if not all of the activities which they uncovered have been, if not secretly authorised already, signed into law and continued without much fuss.

As Trevor Paglen has said: Wikileaks and the NSA have essentially the same political position: there are dark secrets at the heart of the world, and if we can only bring them to light, everything will magically be made better. One legitimises the other. Transparency is not enough – and certainly not when it operates in only one direction. This process has also made me question my own practice and that of many others, because making the invisible visible is not enough either.

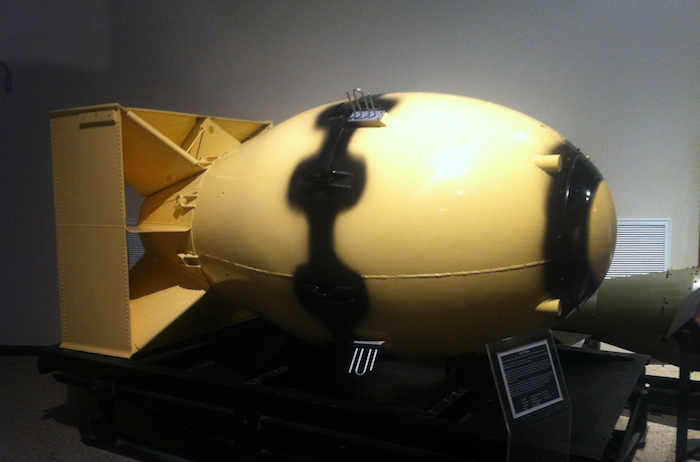

A couple of years ago I was on a wild goose chase trying to find the people who appear in CGI architectural renderings, and for reasons that are much too complicated to go into here, I found myself at the Museum of Nuclear Science and History in Albuquerque, which looks admirably science-y and education-y when you drive past it.

But it is basically a museum of bombs. And bombers. And missiles. And surface-to-air missiles. And cruise missiles. And hydrogen bombs. And ICBMs. And artillery shells. And backpacks. Basically, every single way you could deliver an atomic weapon. After a while, you start to feel kind of nauseous, and kind of blown away that we got through the twentieth century without, you know, actually getting blown away.

[For brevity and bandwidth, I’m leaving out a bunch of slides here which illustrate the above list, but for the full set of photos, see this Flickr set.]

These are the actual casings of two of the four bombs which fell on Palomares, Spain, in 1966, when the B-52 carrying them broke up in mid-air during refuelling. They didn’t fully explode, thankfully, although the conventional explosives in two of them did, causing extensive contamination of the local area, akin to a dirty bomb. This contamination is still being cleared today, and will be for some time.

That nausea is how I feel today – an existential dread not caused by the shadow of the bomb, but by the shadow of data. It’s easy to feel, looking back, that we spent the 20th Century living in a minefield, and I think we’re still living in a minefield now, one where critical public health infrastructure runs on insecure public phone networks, financial markets rely on vulnerable, decades-old computer systems, and everything from mortgage applications to lethal weapons systems is governed by inscrutable and unaccountable softwares. This structural and existential threat, which is both to our individual liberty and our collective society, is largely concealed from us by commercial and political interests, and nuclear history is a good primer in how that has been standard practice for quite some time.

From Albuquerque I drove a couple of hours to another place many of you are familiar with, the Los Alamos national laboratory. It sits across several flat mountain-tops in the high desert, and though we think of it as a secret and enclosed site, it was of course highly networked, because of its demand for computing power.

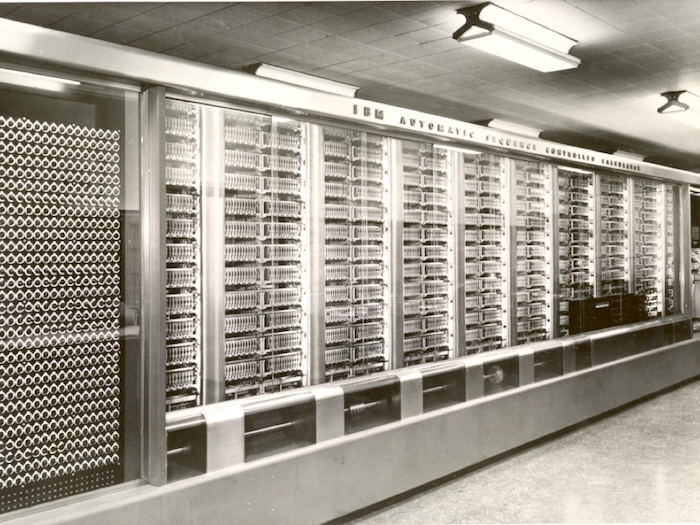

As the headquarters of the Manhattan Project, Los Alamos needed access to the most concentrated computing power of the time, much of which was located elsewhere, both during and after the war.

This was one of the most important machines they went out to use.

It’s the Harvard Mark I, which was an electro-mechanical machine built of both digital and moving parts. It ran a series of calculations in 1944 which were crucial to proving the concept of an implosive nuclear weapon, the kind used at Nagasaki. It has a particularly spectacular appearance of its own because its casing was designed by Norman Bel Geddes, which is why it looks so self-consciously futuristic: Geddes is best known for the General Motors Pavilion, known as Futurama, at the 1939 New York World’s Fair.

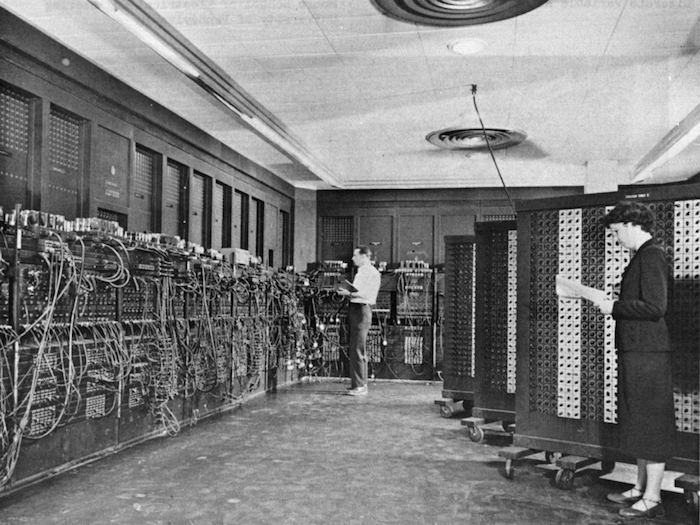

This is the first electronic general-purpose computer, the ENIAC, which was built at the University of Pennsylvania between 1943 and 1946. It was used extensively for Edward Teller’s early work on hydrogen bombs. The size of a couple of rooms, it had thousands of components and millions of hand-soldered connections.

The engineer Harry Reed, who worked on it, recalled that the ENIAC was “strangely, a very personal computer. Now we think of a personal computer as one which you carry around with you. The ENIAC was actually one that you kind of lived inside. So instead of you holding a computer, the computer held you.” I’ve always liked that because it seems to describe the world we live in now, living inside a giant computational machine, from the computers in our pockets, to datacenters and satellites, a planetary-scale network. Reed also wrote about how, if you understood the machine, you could follow the execution of a programme around the room in blinking lights – but this was a privilege of comprehension only a few enjoyed.

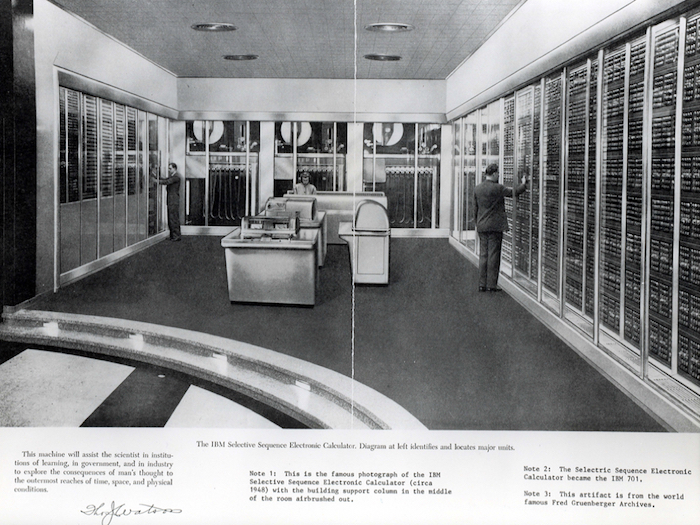

This one marks a kind of high-water mark for me of simultaneous technological visibility and inscrutability. This is IBM’s Selective Sequence Electronic Calculator (SSEC), the computer which became the IBM 701, completed in 1948 and housed in a glass-fronted former shoe shop next to their world headquarters on Fifty-seventh Street and Fifth Avenue in New York. Unknown to all the passersby with their noses pressed up against the glass, the computer was employed to run a programme called HIPPO, which calculated hydrogen bomb yields. That’s the first full simulation of a hydrogen bomb detonation, being run on a computer in a public showroom on 5th Avenue. Visible, but not legible. Unparseable.

And this is where we are today – the virtual bomb site. This is a photo of IBM’s BlueGene/L supercomputer at Lawrence Livermore Laboratory, California, USA, used to design and maintain America’s nuclear weapons now that physical test explosions are no longer permitted. The photographer Simon Norfolk made this image as part of a series which documents supercomputers, but as part of a larger project documenting war and battlefields. The space within these machines is as much part of the battlefield as any tank or gun; it is a war machine, but it looks like any other computer stack.

And this is also where we are today, imprisoning an open architecture inside tiny inscrutable machines we’re not supposed to open. The history of computing is a military history, and an atomic history, and a history of obfuscation and inscrutability.

And this history is complicit in the surveillant present. This historic capacity and inscrutability has its parallel in a contemporary infrastructure, that of surveillance and data-gathering, an infrastructure which occupies a similar landscape: from the Los Alamos mesa to the Utah datacenter being built by NSA. The inscrutability of the machine co-produces the inscrutability of the secret state, just as critique of the state is shielded by the complexity of the technology it deploys. And it goes far beyond the secret state – this model of technology, of information-gathering, of computation, of big data, of ever-increasing ontologies of information – is affecting, destructively, our ways of thinking and reasoning about the world.

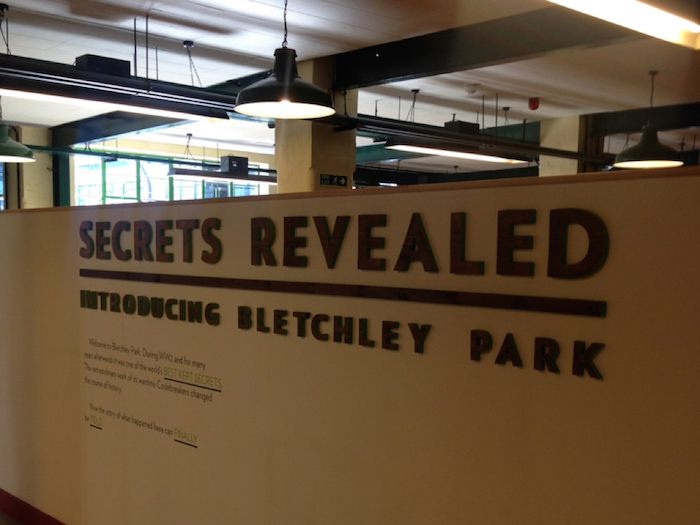

I went to another early computing site recently: Bletchley Park in the UK. This was the home of Britain’s wartime codebreaking efforts, most famously the successful operation to break the German Enigma encryption machine, but also a host of other cipher and surveillance breakthroughs. Bletchley Park is now a visitor attraction, a sort of austerity theme park where they host 1940s fashion theme days and exhibitions based on the movie of Alan Turing’s life.

As an attraction part-funded by GCHQ, it is depressing but unsurprising that Bletchley Park makes virtually no allusion to the post-war activities of those whose skills and techniques were developed here.

As the problematic associations of the exhibition title shown here perhaps demonstrate, I firmly believe that the other main reason that surveillance is tolerated – particularly in the UK – is to do with a nostalgia for the patriotic efforts of codebreakers – that its history is part of the “good war”, with clearly defined enemies, and a belief in the moral rectitude of one side over the other, “our side”, which should be trusted with these kinds of weapons.

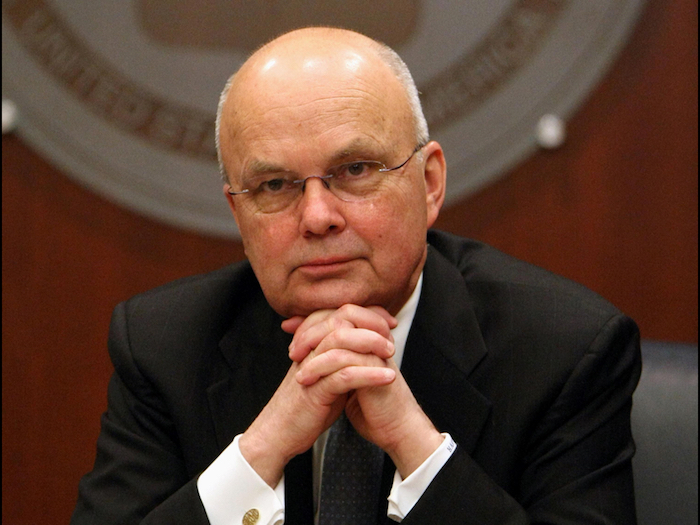

The one concession to the present at Bletchley is a small Intel-sponsored exhibition about cybersecurity, which is largely useless, but also unintentionally revealing. One of the talking heads it calls upon while advising visitors to always use a strong password when browsing online is Michael Hayden. That’s Michael Hayden, former director of NSA and CIA, who is famous in part for affirming that “we kill people with metadata” – an affirmation that data is a weapon in itself.

This thing we call BIG DATA is The Bomb – a tool developed for wartime purposes which can destroy indiscriminately. I was struck hard by this realisation at Bletchley, and once seen, it can’t be unseen.

I’m not the only one who has this sense either*. The phrase “privacy chernobyl” or “meltdown” has been deployed by the media on many occasions, most recently in reference to the Ashley Madison hack when the personal information of thousands of people was posted online for all to see, with little sympathy for the victims, even when they turned out to be just that, conned twice over, first by Ashley Madison’s marketing department, and second by its security team.

But when that data is the names and addresses of all the children in the UK, or an HIV clinic’s medical records, or all of a cellular provider’s customer data, it’s a bit more concerning.

[* While developing this talk, I also came across Cory Doctorow’s excellent unfolding of the radioactive-data argument, and, just recently, Maciej Cegłowski’s Haunted by Data, which forced me to up my game somewhat.]

This data is toxic on contact, and it sticks around for a long time: it spills, it leaches into everything, it gets into the ground water of our social relationships and poisons them. And it will remain hazardous beyond our own lifetimes.

And while I can sound alarmist about this, and recognise I’m at the extreme end of attitudes to dealing with this issue, here’s the thing: I actually don’t think that these fears about data, storage and technology go far enough. I’m unsure about big data’s usefulness in the present and unconvinced by our capacity to deal with it safely and in the long term, but even more than that I think it’s damaging the very way we think about the world.

Just as we spent 45 years locked in a cold war perpetuated by the spectre of mutually assured destruction, we find ourselves in an intellectual, ontological dead end today. The primary method we have for evaluating the world – MORE DATA – is faltering. It’s failing to account for complex, human-driven systems, and its failure is becoming obvious. Not least because we’ve built a vast planet-spanning, information-sharing system for making it obvious to us. The NSA/Wikileaks example is one instance of this failure, as is the confusion caused by real-time information overload from surveillance itself. So is the discovery crisis in the pharmacological industry, where billions of dollars in computation are returning exponentially fewer drug breakthroughs. But perhaps the most obvious is that despite the sheer volume of information that exists online, the plurality of moderating views and alternative explanations, conspiracy theories and fundamentalism don’t merely survive, they proliferate.

As in the nuclear age, we learn the wrong lesson over and over again. We stare at the mushroom cloud, and see all of this power, and we enter into an arms race all over again.

When what we should be seeing is the network itself, in all of its complexity. And when I talk about the network, I mean the internet and us and the entire context, because the internet is only the latest but certainly the most advanced civilisation-scale tool for introspection our species has built thus far. To deal with the internet is to deal with this infinite library and all the inherent contradictions contained within it. Our categories, summaries and authorities are no longer merely insufficient; they’re literally incoherent.

Our current ways of thinking about the world can no more survive exposure to this totality of raw information than we can survive exposure to an atomic core.

And I think this also approaches an answer to Susan [Schuppli]’s question earlier, which is what constitutes an ethics of seeing in the face of the history of atomic image-making, and I would suggest that one response is a refusal and an aniconism: a recognition that these images and image-making practices are also toxic, are radioactive, and need to be buried and surpassed.

[For Susan Schuppli’s work, see her website. The question was in response to an excellent talk from Joseph Masco about the complicity of photography in atomic history.]

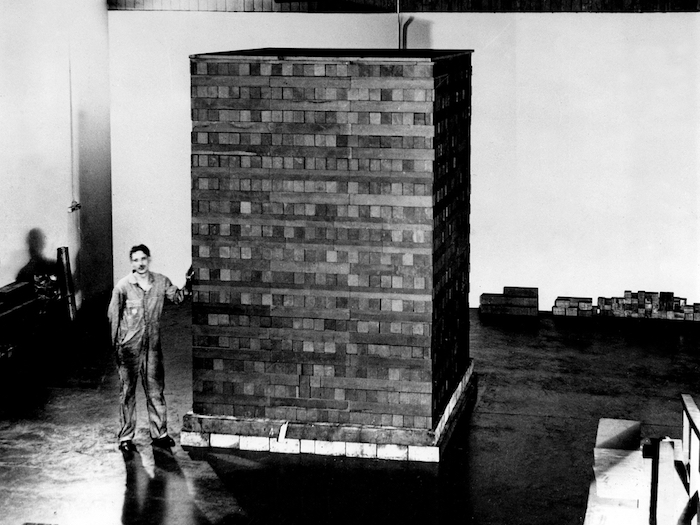

I want to leave you with two final images: the black stack of Enrico Fermi’s Chicago Pile Number One in the racquets court at Stagg Field, site of the first man-made self-sustaining nuclear reaction [this photo is of one of the precursor, or exponential piles] –

– and the cabinet noir or black chamber first inaugurated by King Henry IV of France in 1590, revived by Herbert Yardley in 1919 and given literal form by NSA and the architects Eggers and Higgins in 1986 at Fort Meade in Maryland.

The two chambers represent an encounter with two annihilations – one of the body, and one of the mind, but both of the self. We’ve built modern civilisation on the dialectic that more information leads to better decisions, but our engineering has caught up with our philosophy. The novelist and activist Arundhati Roy, writing on the occasion of the detonation of India’s first nuclear bomb, called it “The End of Imagination” – and again, this revelation is literalised by our information technologies. We have to figure out a new way of living in the light of the technologies we’ve built for ourselves. But then, we’ve been trying to do that for a while.
